Bluesky: On community trust & safety

This is a response to the June 24 proposals. While it contains other thoughts, it is not a complete picture of my views on moderation. I'm over 3,000 words at this point though, so I'm keeping things to a limited scope. These are also my personal views and recommendations, offered for discussion and consideration rather than as any kind of gospel.

Links to the GitHub issues I've raised related to the proposals are at the very bottom, for discussion. And this post is the one I made discussing/announcing all this, if you want to promote (or dunk).

Over the past few weeks, I have been heavily engaged with Bluesky - far more so than with any social network since the early days of Twitter. The technology, community, and level of activity remind me of early experiences with IRC, Usenet, and the 'early' web of loosely connected blogs, GeoCities sites, and message boards.

And I can't shake the feeling that the same doom is lurking.

A point in time

Bluesky is built on ATProto and for the time being those are synonymous. The Bluesky app and service are the only instance (at least, of consequence) and the platform has been driven by user-to-user invitation. At its core though, ATProto is a distributed network, designed for federation, independence, and built in-the-open as open source.

This is a moment in platform history that cannot be recaptured. Decisions made at this stage will not only inform the primary gateway to the service (i.e. https://bsky.app/), but will echo through all future instances as the model for management, deployment, and community.

The Bluesky team appears very aware of this responsibility, and is engaging closely with the community. But at some point - likely some point soon - the goals of the platform will begin to diverge from the goals of the communities that rely on that platform.

Community

Bluesky has become a de-facto home for a mix of marginalised communities and progressive values, driven by the invitation process. These communities have thrived; not only due to the presence of like-minded individuals, but due to the absence of aggressive adversaries. Posts 'feel' safe as there isn't an army of nay-sayers waiting in the wings, and posting on issues such as trans rights does not attract an army of aggressive alt-right replies.

That is, the chilling effect you see on sites like Twitter is not present, and individuals can express themselves more freely than they have in years.

And where there are these forces, communities have found ways to self-manage. Mute lists are used to identify and flag harmful accounts for others, specific community members are looked to as trusted 'protectors', and calling out bad behaviour is generally safe and normalised.

The danger to marginalised communities is that as the platform grows, twin threats emerge: the mainstream instance must begin to balance competing community needs, and alternate instances will appear - some of which will contain large groups actively hostile to those that found a home on the service.

The need for moderation

A public square is crucial.

A reasonable-sounding proposal would be to move those communities to more private spaces. But in doing so, some groups are effectively denied representation and access to public life. Those looking for community will be unable to find it without effort, and those on the outside of those private support networks will be isolated and far more vulnerable.

For the same reason, I don't believe we should seek to implement private spaces on top of the existing application. The danger is further marginalising groups by making them invisible to the mainstream, tucked away in private little corners. We need a level of separation without isolation.

Direct messaging, groups, and private threads pose a danger to normalisation and acceptance if they are adopted by small groups as the only way to use the platform safely and without fear of harassment.

The Tech Bros

That requirement for moderation is the first major point of friction.

There is a group of technology-first users that believe that any moderation is censorship and that the platform should focus solely on the tech.

This group is fundamentally dangerous to the idea of community. Censorship-resistant in this context is equivalent to forced interaction with those that wish you harm. The lure of ATProto for this group is that it can be wielded to progress ideas that are implicitly or explicitly hurtful, in a way that cannot be progressed in mainstream environments. Their worldview is often that 'getting your feelings hurt' is never valid - never mind the chilling effect on freedom of expression if you can't express human vulnerability without someone suggesting you kill yourself.

This focus on technology over people is, in my experience, driven primarily by those for whom 'getting your feelings hurt' is the worst possible thing that can happen to them online. They have not been exposed to doxxing campaigns, hateful brigading, constant harassment because of who they are or what they represent.

While anti-censorship is a laudable goal for activists, organisers, and others who may find themselves suppressed by mainstream platforms, resisting censorship is not the same as resisting moderation that is primarily community driven and community desired.

The company is a future adversary

Thankfully, the Bluesky team appears cognizant of the danger. Real discussions have occurred on the impact of (oft-unasked for) moderation roles on people.

But the goals of the community and the goals of the platform will necessarily diverge here. The company isn't a future adversary, it is a current adversary - at least to some. Difficult, binary decisions need to be made in the near term that will set a tone and alienate existing groups.

The proposals by the team all contain an element of unavoidable conflict, and the below are my thoughts on their implementation. This includes recommendations about how users may interact with the feature, should it come to pass.

I'm not going to go deep into the technology side - I am assuming that all proposals and recommendations are possible, but this may not be the case. None of these are really designed to be a fait accompli, but rather a collection of thoughts for discussion.

I am also only talking about moderation, community management, and safety. Lists, as an example, have significant uses beyond moderation (such as curating the science feed I developed), but this document is going to be huge already.

User Lists, Reply-Gating, and Thread Moderation

ATProto is fundamentally a public system (you can read more about what information is/isn't private in this document by @eepy.bsky.social). Lists - including block and follow lists - are public. Posts are public.

Moderation, therefore, must be public.

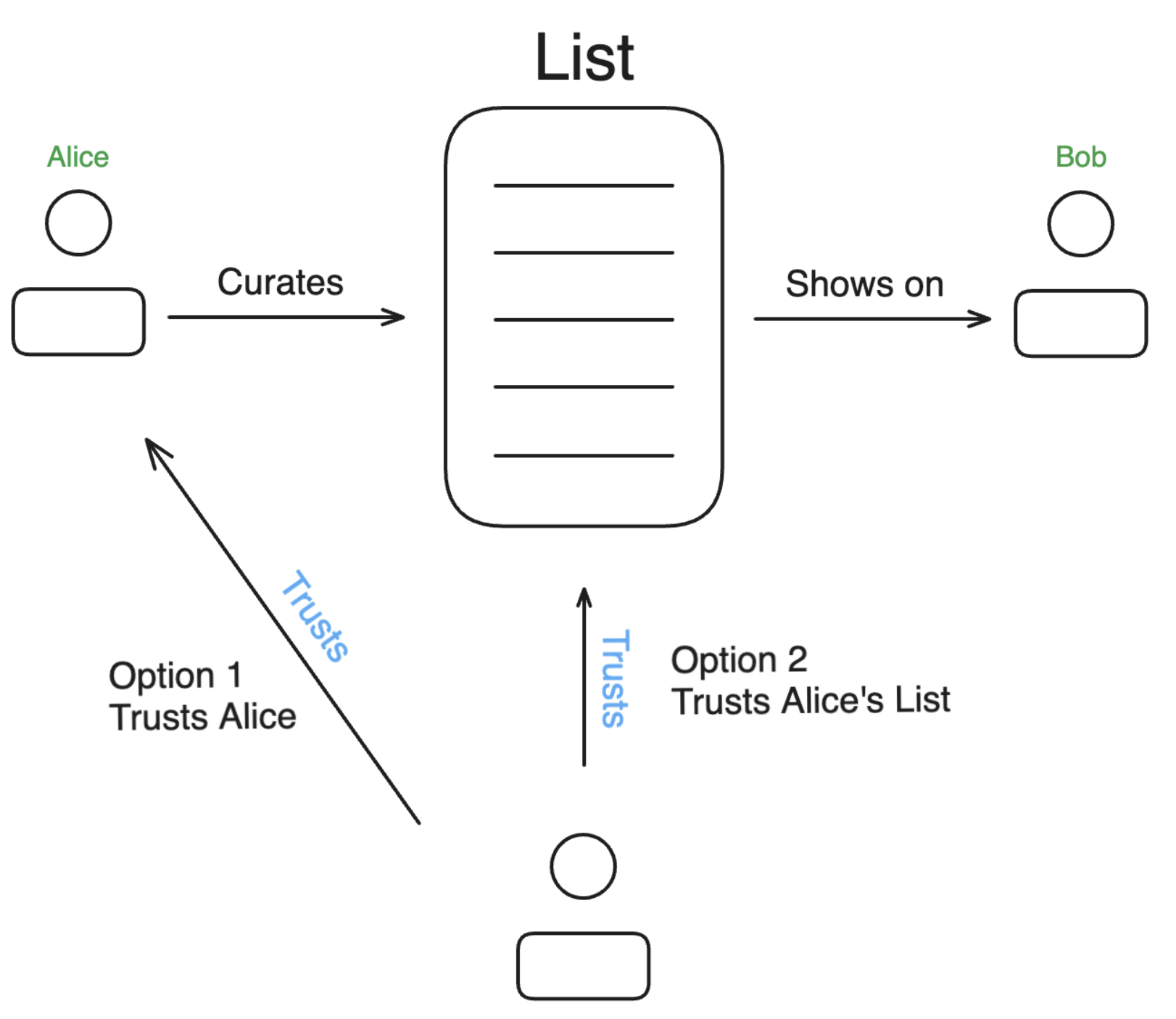

Trusted Lists

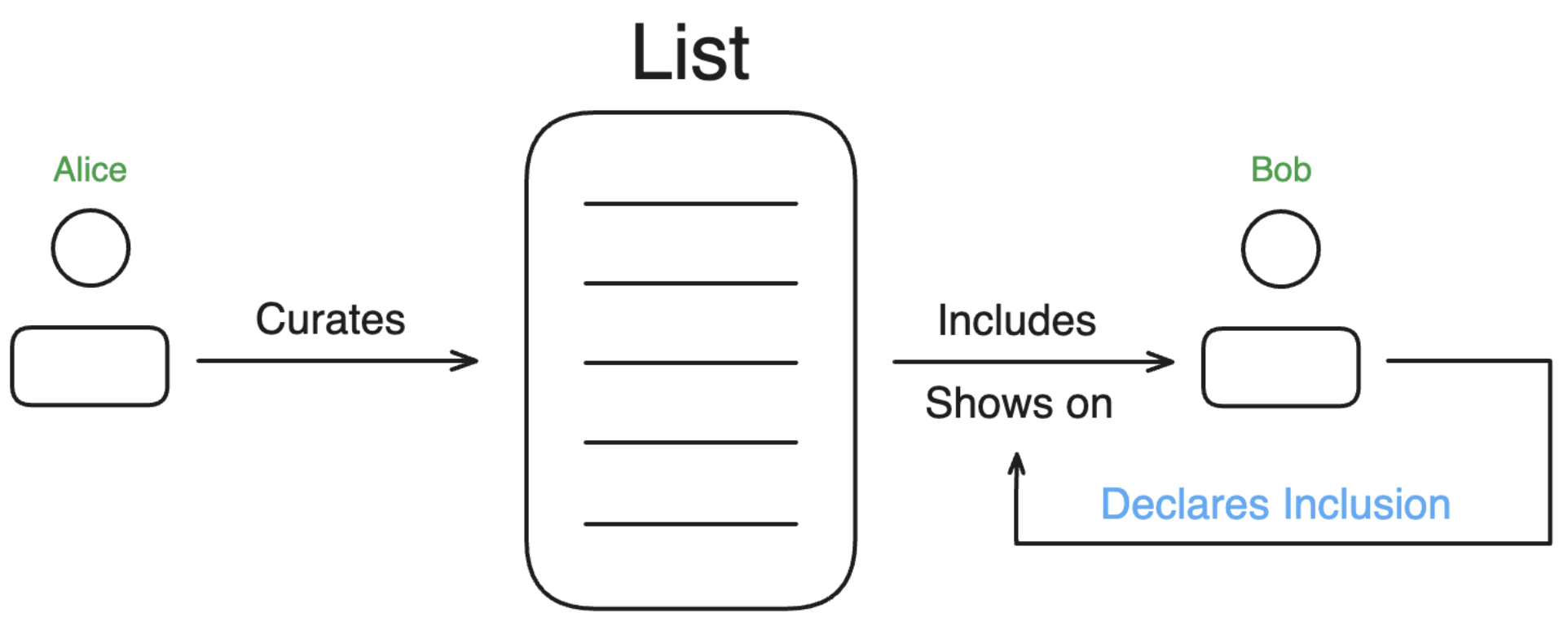

The proposal for 'Trusted Lists' is effectively the system that exists now, formalised.

In this model, a trusted community member (or perhaps members) is responsible for a list of 'bad actors' (or 'good actors') for muting/blocking/other community action. In this scenario, a lot of weight is placed upon Alice to 'do the right thing', and the process for managing and maintaining the list is open to both intentional and unintentional abuse.

Calls for inclusion are often public, which serves both to notify Alice and to allow the community to come to a rapid and joint decision. In some ways, this is essential to the integrity of the process, and while the call-out itself is often a form of harassment, it is done in a way that highlights the bad behaviour. Advocates for both sides can have a public discussion.

At the end of this process, however, one side will leave with animosity for Alice. Over time this becomes a contingent of the social space that has both demonstrated bad behaviour and now has a specific target for resentment. This is made substantially worse in cases where a community is somewhat divided, as a slow erosion of trust is likely to isolate Alice as a 'capricious' arbiter. Given that the list holder is often a core community member, this is how factions fall out and fall apart.

Anonymous lists and group moderation simply move the target from Alice to the more general 'community', which has the potential to be somewhat worse as guilt-by-association kicks in.

Recommendation: Should this model be considered, significant care must be taken to ensure that the list holder does not become a pariah, defeating the purpose of the process. This can be done at a community level (i.e. some kind of voting in a joint list), or a technology level (i.e. implement list proposals voted on by list users).
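As a rough illustration of the technology-level option, here is a minimal Python sketch of list proposals voted on by list users. Everything in it is hypothetical: the `ListProposal` class, the `is_accepted` function, and the `quorum` and `threshold` values are my own placeholders and are not part of ATProto or the Bluesky proposals.

```python
from dataclasses import dataclass, field


@dataclass
class ListProposal:
    """A proposal to add one account to a shared list (hypothetical model)."""
    subject: str                                           # handle proposed for the list
    votes: dict[str, bool] = field(default_factory=dict)   # voter handle -> approve?

    def cast(self, voter: str, approve: bool) -> None:
        # One vote per voter; voting again overwrites the earlier vote.
        self.votes[voter] = approve


def is_accepted(proposal: ListProposal, subscribers: set[str],
                quorum: float = 0.5, threshold: float = 0.6) -> bool:
    """Accept only if enough subscribers voted and enough of those approved.

    The quorum and threshold defaults are arbitrary illustrative values.
    """
    # Ignore votes from accounts that do not subscribe to the list.
    valid = {v: a for v, a in proposal.votes.items() if v in subscribers}
    if not subscribers or not valid:
        return False
    if len(valid) / len(subscribers) < quorum:
        return False
    approvals = sum(valid.values())
    return approvals / len(valid) >= threshold
```

The point of a scheme like this is that the decision is attributable to the subscribers as a whole rather than to a single list holder, although, as noted above, that may just move the resentment to the group.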

Declared Memberships

Declared membership groups are likely to be poison to community-building.

Should they be used to determine a list of 'good actors', I predict that we will immediately see in-group/out-groups form, and the notion of the public square largely dissolve except for the most banal of discussion, or for the conduct of hate speech intended solely for provocation.

Recommendation: As a model for moderation, declared memberships should not be considered. They may have utility for allocating 'committee' membership for list voting (such as in 'Trusted Lists') but I believe the risk of these leading to fracturing is significant.

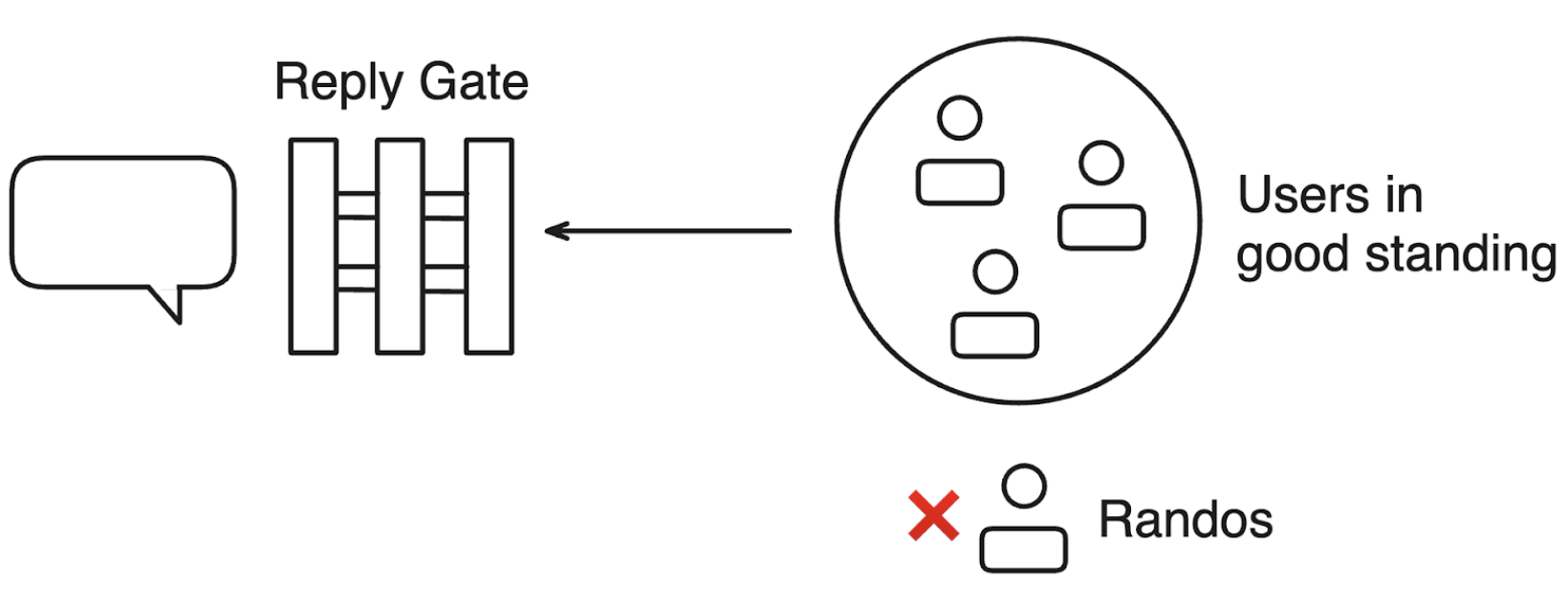

Reply Gating

Reply gating (to only people I follow, or only people on a list, or only people not on a list), is a good idea. This allows for targeted communication controlled by the end user, and adds a barrier to harassment.

However, at a cultural level, too many gated posts to an overly-limited audience will be a major impediment to inclusion. And using lists moderated by others for gating has similar drawbacks to overall trusted list management.

We have seen on other sites that quoting and screenshots will be used to attack people over gated comments, which implies that in use this will serve to set some guardrails, but not be a panacea.

Recommendation: Reply gating is probably a net good. Should gated replies become standard community practice, the system should be revisited either at a platform level or at a community level.
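To make the three gating modes in this section concrete, here is a small Python sketch of the check a client or server might run before accepting a reply. The `Gate` enum and `can_reply` function are names I have made up for illustration, and the rule that posters can always reply in their own thread is my assumption, not something stated in the proposal.

```python
from enum import Enum


class Gate(Enum):
    """Hypothetical gating modes, mirroring the options discussed above."""
    EVERYONE = "everyone"
    FOLLOWED_ONLY = "followed_only"  # only people the poster follows
    ON_LIST = "on_list"              # only people on a chosen list
    NOT_ON_LIST = "not_on_list"      # anyone except people on a chosen list


def can_reply(replier: str, poster: str, gate: Gate,
              poster_follows: set[str], gate_list: set[str]) -> bool:
    """Return True if `replier` may reply to a post by `poster` under `gate`."""
    if replier == poster:
        return True  # assumption: posters can always continue their own thread
    if gate is Gate.EVERYONE:
        return True
    if gate is Gate.FOLLOWED_ONLY:
        return replier in poster_follows
    if gate is Gate.ON_LIST:
        return replier in gate_list
    if gate is Gate.NOT_ON_LIST:
        return replier not in gate_list
    raise ValueError(f"unknown gate: {gate}")
```

Note that a check like this only governs replies. It does nothing about quotes or screenshots, which is why gating sets guardrails rather than solving the problem.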

Thread Moderation

When I said there will be binary choices ahead that will frame the company as an active adversary - thread moderation is the specific area I had in mind.

Ultimately any manipulation of threads and reply chains comes down to one question: Who owns the reply content under a post?

Allowing deletion is likely the most straightforward answer to dealing with harassment online. If someone is creepy in replies, simply delete it. However, this kind of curation brings a significant downside: if the reply is correcting misinformation, or making a remark that goes against the initial poster's malicious intent - that poster has total control of the narrative.

Imagine a scenario where false state propaganda is posted, and every contrary reply is deleted. Even within a relatively small network, a post with 50 affirming replies is very powerful at pushing a message especially if the presence of deleted activity is completely 'behind the scenes'.

Reply hiding addresses this a little, but creates an 'attractive nuisance' situation where hidden replies are available to end users and there is a temptation to see 'what they are hiding'. In this instance, it may help to deal with creeps in replies, but not necessarily address misinformation that is posted to replies - instead feeding a suppression narrative.

Neither option is ideal, but reply hiding seems less bad because most users will see a reply via existing trust relationships (i.e. followers, etc.) and it's reasonable to assume that they will respect a poster's wishes. (At least, reasonable compared to the average visitor.)

Allowing threads to be locked can be treated similarly to reply gating in terms of moderation - where replies are simply gated off from all users. As a tool for 'settling' a thread, or just opting out as a poster, I think thread locks are a good idea.

Recommendation: Allow reply hiding, but not deletion. Do thread locking.

Labelling and Moderation Controls

Labelling seems, prima facie, like a straightforward solution. Allow end users to label their own posts (to allow other users to self-select), and have systems in place for mass moderation and flagging.

The complexity of the proposed label set, and the potential for misuse of a Labelling Service, give me pause. As does the balance of categories.

Labelling Services for Harassment

The proposal does not address the use of a labelling service operating on a federated server that is used for harassment. For example, a service could tag everyone from a particular server, or connected to a particular user, with one of the tags, and subscribers could use that to single out and harass posters.

This is significantly worse than lists, as lists are public, and moderation controls (up to defederation) can be used to defend against it to some degree. However, it does not appear that Labelling Services will be accountable. How do we know which users are using a malicious service, or which flags our content has been given by services we don't use?

The unfortunate reality is that this will be weaponised almost immediately.

Recommendation: Reconsider this design to include some kind of trust requirement in order to be labelled in the first place, perhaps just completely hiding things where a poster does not have an opt-in trust relationship with a service.
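One way to read that recommendation is as a filter applied before any label reaches a subscriber: a label is only surfaced if the labelled poster has opted in to the service that issued it. The Python sketch below shows that reading only. The `Label` tuple, the `opted_in` mapping, and `visible_labels` are hypothetical names of my own, and nothing here reflects how the proposed Labelling Services actually work.

```python
from typing import NamedTuple


class Label(NamedTuple):
    """A label applied by a service to an account (hypothetical shape)."""
    service: str   # the labelling service that issued the label
    subject: str   # the account being labelled
    value: str     # the label itself, e.g. 'spam'


def visible_labels(labels: list[Label],
                   opted_in: dict[str, set[str]]) -> list[Label]:
    """Keep only labels from services the labelled account has opted in to.

    `opted_in` maps an account to the set of services it trusts. Accounts
    with no entry have opted in to nothing, so all labels on them are hidden.
    """
    return [
        label for label in labels
        if label.service in opted_in.get(label.subject, set())
    ]
```

The obvious trade-off is that genuinely bad actors will never opt in to a service that labels them accurately, so a rule like this would likely need to sit alongside labels applied by the poster's own server or by the platform itself.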

Balance of Categories

I don't believe the labels proposed adequately separate network concerns from human concerns, nor do they adequately provide an 'escalation gradient'.

I don't believe that mixing 'scam' and 'net-abuse' into a misinformation category, with 'spam' and 'clickbait' in another, provides enough separation for a user to consider category-wide blocks. These may be grouped differently in a UI, but the grouping as proposed encourages unblocking 'Misinformation' in order to see 'unverified' information (which may be breaking news).

The presence of an 'Unpleasant' category is also of concern - the majority of those labels are very subjective (i.e. 'bad-take') and will strongly encourage their use only as meme or unserious category markers, or ways to bully and harass users by applying the 'tiresome' marker to them.

Depending on implementation, the more subjective categories are an avenue for deniable abuse. For example, a very right-leaning service could mark all sex workers as 'shaming' so that they can be harassed when they appear on feeds.

Recommendation: Vastly simplify the list, and favour objective measures, especially for labels that are not applied by the poster.

Hashtags

I like the majority of the proposal, but it appears less well-developed than the others. Ultimately I would prefer a simple implementation of tags as metadata, made available through the API, and then a wait-and-see regarding what the community demands.

Other considerations

There are other means to achieve similar outcomes that may be less difficult to manage, or less open to abuse. I have some thoughts, but two examples are:

- A method to identify users that have been blocked by a large portion of those you follow and provide a warning in the UI would go a long way to identifying those that your community feels are potentially bad actors. This kind of approach leverages the existing social graph, provides a means to forewarn users, and does not require active management of a 'bad actor' list.

- Indicate social 'distance' such as 'not followed by my followed' in order to indicate how potentially friendly someone is, and easily bring them 'into the fold' (or not) by following. This wouldn't need to mechanically affect interactions, but rather indicate that this person may be outside your community norms.

There is a lack of tools in-app to visualise the social network. Things we rely on in real life (such as when you meet someone at a specific event) are not present, so we should find ways to express similar concepts to help people both find each other, and avoid communities they don't want to interact with.

Conclusion

This is way longer than intended (almost 3,000 words). Ultimately, I was looking to get my own thoughts together about how I felt about the proposals that went up today, and I hope that it provides some amount of valuable input.

Ultimately I believe that the success or failure of the platform will not be driven by technology. The technology is, more or less, complete 'enough' that people are forming groups and communities within it. The real danger comes when those communities are suddenly exposed to ten times the number of people, as will happen when the beta period ends, or when federation starts up officially.

In order to keep a level of coherence and engagement, and to preserve the freedom of expression on the platform, we need effective moderation. That needs to come partly from better platform tools, but primarily from communities advocating for the outcomes that they want.

Further Discussion

If you have read through all this - I really do appreciate it, and I'm very happy to take any feedback. I hope these are helpful ideas in shaping the network, and the people that are going to live & work amongst it, but even if they're not, I hope they're a jumping off point for constructive discussion.

This post is the one I made announcing all this if you want to promote (complain, or dunk). I can be reached at https://bsky.app/profile/bossett.bsky.social and I have put these together as GitHub issues related to the proposal, those links below.